Large Language Models (LLMs) are shaping the future of artificial intelligence. From powering chatbots and content tools to enabling intelligent assistants and code generators, these models are becoming the foundation of modern AI applications. But what exactly are LLMs, and how can you create one yourself?

This post walks you through the essentials of LLMs, from how they work to how you can build a simplified version using open-source tools.

What Is a Large Language Model?

A Large Language Model (LLM) is a type of artificial intelligence trained to understand and generate human language. These models are built using neural networks, particularly transformers, and are trained on large-scale text datasets like books, articles, and websites.

Popular LLMs include OpenAI's GPT-4, along with smaller open models such as GPT-2 and DistilGPT-2.

They are capable of performing tasks such as:

- Text generation

- Language translation

- Question answering

- Summarization

- Code generation

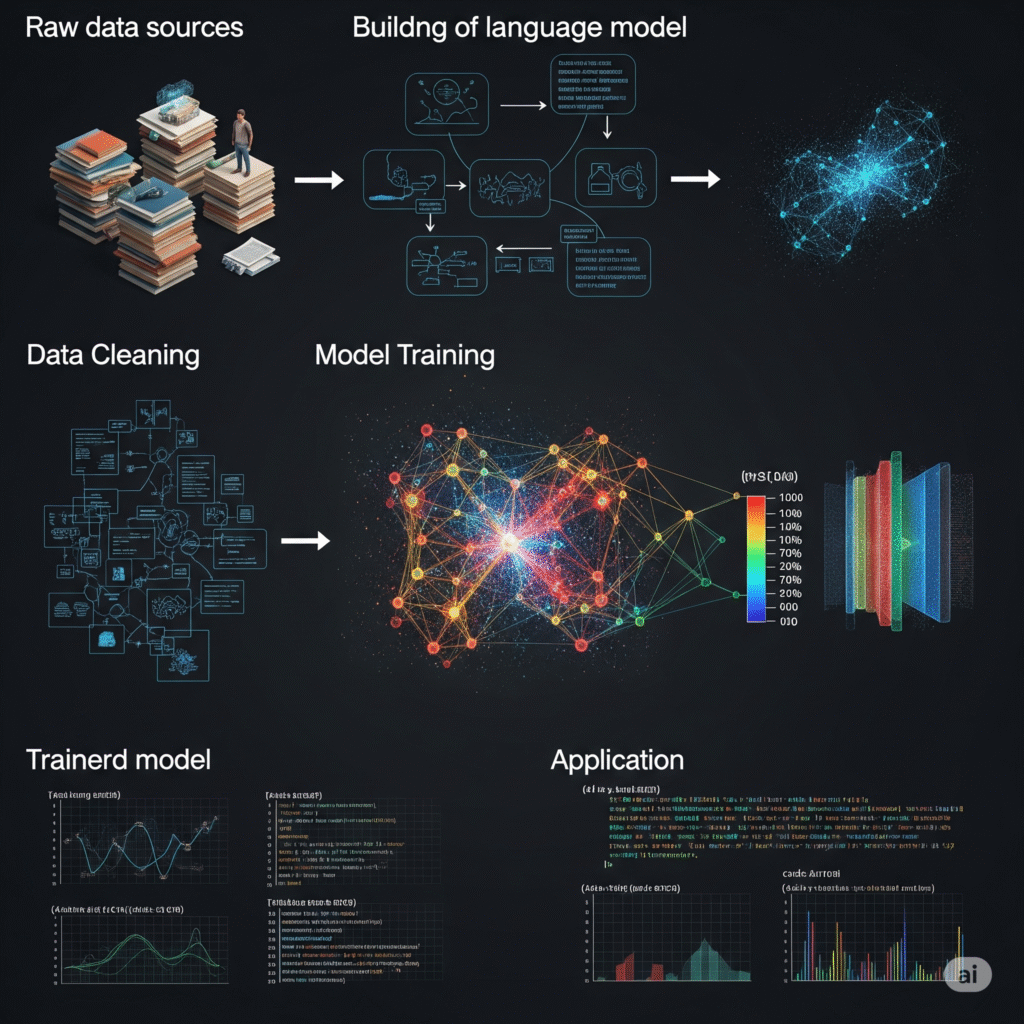

How Do LLMs Work?

LLMs rely on the transformer architecture, which uses attention mechanisms to weigh each word in a sequence against the others. During training, the model learns how words and phrases relate to each other by repeatedly predicting the next token (a word or piece of a word) in a sequence.

This is done through:

- Pretraining – Reading massive amounts of text to learn language structure.

- Fine-tuning – Adjusting the model for specific tasks using labeled datasets.
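To make the pretraining objective concrete, here is a minimal sketch of how next-word training examples can be derived from raw text. It assumes naive whitespace splitting instead of a real tokenizer, and the helper name `next_token_pairs` and its `context_size` parameter are made up for illustration.

```python
def next_token_pairs(text, context_size=3):
    """Build (context, target) training pairs from a piece of text.

    Assumption: tokens are whitespace-separated words; real LLMs use
    subword tokenizers and much longer contexts.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        # The context is up to `context_size` tokens preceding position i.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs
```

Calling `next_token_pairs("the cat sat on the mat")` yields five pairs. The model sees each context and is trained to assign high probability to the matching target.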

These models require significant compute power, often using GPUs or TPUs, but smaller models can be fine-tuned with limited resources.
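The attention mechanism mentioned above can be sketched in a few lines of plain Python. This is a simplified illustration, not a full transformer layer: it leaves out the learned projection matrices, multiple heads, and masking, and the function names are chosen here for readability.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Score the query against every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs
```

Each output vector is a blend of the value vectors, with more weight given to positions whose keys match the query most closely. This is how a token can "look at" the other tokens in the sequence.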

How to Build a Mini LLM

If you’re interested in building your own LLM, you don’t have to start from scratch. With open-source tools and accessible datasets, you can build and fine-tune a basic language model for experimentation or custom applications.

Step 1: Learn the Fundamentals

Start with:

- Python programming

- Basics of machine learning and deep learning

- Natural Language Processing (NLP) concepts

- Transformer architecture (see The Illustrated Transformer: https://jalammar.github.io/illustrated-transformer/)

Step 2: Gather Text Data

Use publicly available text datasets, and make sure to clean and preprocess the data before training.
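As a rough idea of what cleaning can look like, here is a small sketch using only the Python standard library. Which characters count as "special" is an assumption made for this example, and regex-based tag stripping is a shortcut; a proper HTML parser is more robust on messy pages.

```python
import html
import re


def clean_text(raw):
    """Strip HTML tags and unusual characters from a raw text snippet."""
    text = html.unescape(raw)                   # decode entities such as &amp;
    text = re.sub(r"<[^>]+>", " ", text)        # drop HTML tags
    text = re.sub(r"[^\w\s.,!?'-]", " ", text)  # keep letters, digits, basic punctuation
    return re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace
```

You would typically apply a function like this to every document before tokenization, then drop any documents that end up empty.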

Step 3: Preprocess the Data

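Tokenization is the process of splitting text into tokens (words or word pieces) and mapping each token to a numeric ID the model can process. You can use tools such as SentencePiece or spaCy.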

Clean data is crucial for performance: remove stray special characters, HTML tags, and irrelevant content.
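To show what "converting text into tokens" means in practice, here is a toy word-level tokenizer. It is only an illustration: production tokenizers (for example, the byte-pair encoding used by GPT-2) split text into subword units, and the `ToyTokenizer` class and its `<unk>` placeholder are names invented for this sketch.

```python
class ToyTokenizer:
    """Word-level tokenizer that maps each known word to an integer ID."""

    def __init__(self, corpus):
        # ID 0 is reserved for words never seen in the corpus.
        self.vocab = {"<unk>": 0}
        for word in corpus.lower().split():
            self.vocab.setdefault(word, len(self.vocab))
        self.inverse = {idx: word for word, idx in self.vocab.items()}

    def encode(self, text):
        """Convert text to a list of token IDs."""
        return [self.vocab.get(word, 0) for word in text.lower().split()]

    def decode(self, ids):
        """Convert token IDs back to a space-separated string."""
        return " ".join(self.inverse[i] for i in ids)
```

After building the vocabulary with `ToyTokenizer("the cat sat on the mat")`, `encode` turns text into IDs and `decode` turns IDs back into text. Unknown words fall back to the `<unk>` ID, which is one reason real tokenizers prefer subword units.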

Step 4: Fine-Tune a Pretrained Model

Use a smaller pretrained model like GPT-2 or DistilGPT-2 via Hugging Face Transformers:

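```shell
pip install transformers datasets
```

```python
from transformers import GPT2Tokenizer, GPT2LMHeadModel

# Download the pretrained GPT-2 tokenizer and model weights from the Hugging Face Hub.
tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
model = GPT2LMHeadModel.from_pretrained("gpt2")
```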

Train using the Hugging Face Trainer class or a custom training loop. Small models like these can be trained on the free GPU tier of Google Colab.
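A fine-tuning run with the Trainer class might look roughly like the sketch below. Treat it as a starting point under several assumptions: your training data is a plain Python list of strings, PyTorch is installed alongside `transformers` and `datasets` (recent versions may also need `accelerate`), and the hyperparameters and the `gpt2-finetuned` output directory are placeholder values chosen for illustration, not tuned settings.

```python
def fine_tune(texts, output_dir="gpt2-finetuned", epochs=1):
    """Fine-tune GPT-2 on a list of strings with the Hugging Face Trainer."""
    # Imported inside the function so this file loads even where the
    # heavy dependencies are not installed yet.
    from datasets import Dataset
    from transformers import (
        DataCollatorForLanguageModeling,
        GPT2LMHeadModel,
        GPT2Tokenizer,
        Trainer,
        TrainingArguments,
    )

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    tokenizer.pad_token = tokenizer.eos_token  # GPT-2 has no pad token by default
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    def tokenize(batch):
        # Truncate long examples so batches stay small (128 is an arbitrary cap).
        return tokenizer(batch["text"], truncation=True, max_length=128)

    dataset = Dataset.from_dict({"text": texts})
    dataset = dataset.map(tokenize, batched=True, remove_columns=["text"])

    # mlm=False selects causal (next-token) language modeling.
    collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)
    args = TrainingArguments(
        output_dir=output_dir,
        num_train_epochs=epochs,
        per_device_train_batch_size=4,
        report_to="none",  # skip experiment-tracking integrations
    )
    trainer = Trainer(
        model=model,
        args=args,
        train_dataset=dataset,
        data_collator=collator,
    )
    trainer.train()
    trainer.save_model(output_dir)
    return trainer
```

You could call it as `fine_tune(my_cleaned_texts)`, where `my_cleaned_texts` is your own list of preprocessed strings. Swapping `"gpt2"` for `"distilgpt2"` should reduce memory use and training time.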

Step 5: Deploy the Model

You can deploy your model as a web app using:

- Gradio

- Streamlit

- Flask/FastAPI for backend

- Hugging Face Spaces for free public hosting
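As one possible shape for a Gradio deployment, the sketch below wraps a text-generation pipeline in a simple text-in, text-out interface. The helper names `make_generate_fn` and `build_demo` are invented for this example, the 50-token limit is arbitrary, and it assumes `gradio` and `transformers` (with PyTorch) are installed.

```python
def make_generate_fn(text_generator, max_new_tokens=50):
    """Wrap a text-generation pipeline as a plain prompt -> text function."""
    def generate(prompt):
        # A transformers text-generation pipeline returns a list of dicts
        # with a "generated_text" key; take the first candidate.
        results = text_generator(prompt, max_new_tokens=max_new_tokens)
        return results[0]["generated_text"]
    return generate


def build_demo(model_name="gpt2"):
    """Create a Gradio text-in, text-out interface around the model."""
    # Imported here so the helper above can be used without these packages.
    import gradio as gr
    from transformers import pipeline

    text_generator = pipeline("text-generation", model=model_name)
    return gr.Interface(
        fn=make_generate_fn(text_generator),
        inputs="text",
        outputs="text",
    )
```

Calling `build_demo().launch()` starts the app on a local URL. To serve your own fine-tuned model, you can pass the directory where you saved it as `model_name`, provided the tokenizer files were saved there as well.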

Useful Resources

These links are useful for readers who want to explore LLMs further:

- https://openai.com/research/gpt-4

- https://huggingface.co/transformers/

- https://colab.research.google.com/

- https://jalammar.github.io/illustrated-transformer/

- https://github.com/google/sentencepiece

- https://spacy.io/

Real-World Applications of LLMs

LLMs are powering:

- Chatbots and customer support automation

- Blog and content generation tools

- Email writing assistants

- Coding companions like GitHub Copilot

- Language translation systems

- Legal and medical text summarizers

- Cybersecurity tools for detecting phishing and spam

The range of use cases keeps growing as more developers and companies fine-tune these models for domain-specific applications.

Final Thoughts

LLMs represent a massive leap forward in artificial intelligence. Whether you’re a developer, researcher, or tech enthusiast, understanding and experimenting with these models can give you a major edge in the AI space.

With open-source tools and publicly available data, building your own language model has never been more accessible. Start small, stay curious, and explore the frontier of human-like language generation.

For more blogs like this, keep following Technaukary — your hub for practical tech guides, AI news, and project tutorials.